This project has gone on for a long time. Things in my life have made me put this project aside many times but now I am finally getting around to it again. Hopefully I will post more updates soon but until then here is a clip from today of K9 up and running.

Games Without Frontiers

Software, Technology & SCIFI by William Reichardt

Thursday, October 24, 2024

It's Been a While - Where is K9 Today?

Friday, January 2, 2015

Javascript for Automation of Messages In Yosemite

I have just spent a few hours trying to do something that should have been very simple and was not, so I thought I would record my lessons learned in a post. With the release of OS X Yosemite, JavaScript is supposed to be a viable alternative scripting language for controlling applications. I have been dealing with AppleScript for many years and I would never describe our relationship as a good one. I look at AppleScript as about as useful at solving problems as Siri. Sure, you can talk to it in plain English, but it never seems to understand what you are trying to say. JavaScript, on the other hand, I know very well and can get to do just about anything, so this seemed like a great new alternative for me.

I had high hopes for this new integration so I set myself a simple task. Send a text message via the Messages app to an existing contact in my buddies list. Sounds reasonable, right? I had a lot to learn.

In the Script Editor application, all you have to do to send a message in AppleScript is:

and hit the play button. Keep in mind that over time, this syntax may change so if in the future, this script does not work for you, you will have to do some research on how Messages may have changed.

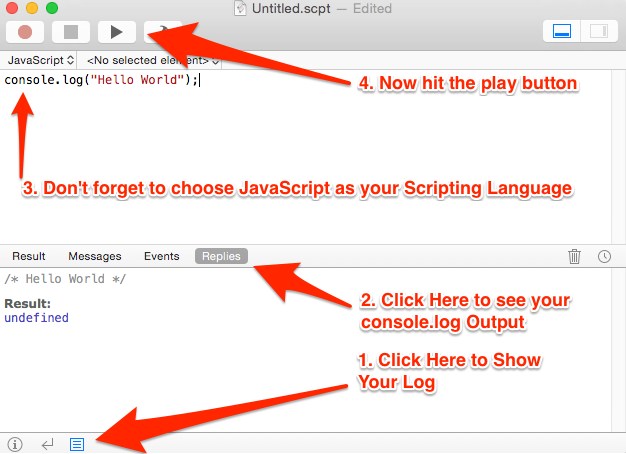

Now, how to translate this simple command to JavaScript? The first step is to establish the ability to log things to a console, since there is no debugger in Script Editor (for some unknown reason).

According to the JavaScript for Automation Release Notes I should be able to access the Messages application like this.

So above you can see, I got an application object but it has no JavaScript properties or functions that I can discover. I will have to resort to the Library in the Window>Library>Messages menu to find out what I can do with this object. Here I see this description.

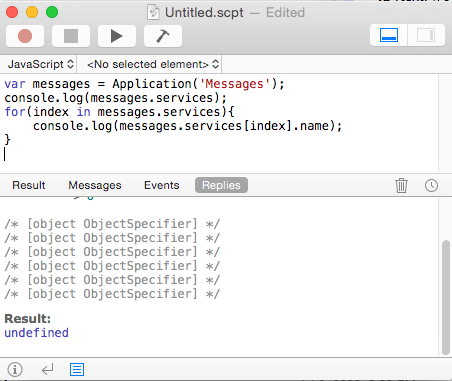

Which says I should have buddies and services properties. I want to send a message to an existing buddy over an existing service. I have multiple services since I use Messages as my primary chat tool. Let's see what services I have.

I have never seen an ArraySpecifier before, but I tried treating it like a JavaScript Array and it works. It looks like messages.services is an indexed array of six elements. I can't enumerate the properties of any of the service objects because each one is an ObjectSpecifier, and those don't have any discoverable properties or functions either. With some digging I discovered that ObjectSpecifier and ArraySpecifier are essentially classes that reference objects within the application. They are not the objects themselves; they act as a proxy between you and the object you are trying to manipulate. They do not expose any information about what the object they represent can do, however, so you are dependent on the Library/Dictionary document the application exposes to know what you can do with them. Below is the entry describing a Service.

Services have a property called name which should help me tell one from another. Let's list them.

This was disappointing. I was expecting to see the names of the services but all I got was another ObjectSpecifier. After a little more digging I discovered that if you call the property you want to read as a function ( .name() ), you will get its value instead of its ObjectSpecifier. Now my list looks like this.
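As a minimal sketch of that loop, assuming `app` is whatever `Application('Messages')` returns in Script Editor. The helper name `serviceNames` is made up for illustration and is not part of any API.

```javascript
// Hypothetical helper: collect the display name of every service.
// `app` is assumed to be the object returned by Application('Messages') in JXA.
function serviceNames(app) {
    var names = [];
    for (var i = 0; i < app.services.length; i++) {
        // Calling name() as a function returns the value.
        // Reading .name without the parentheses only yields another ObjectSpecifier.
        names.push(app.services[i].name());
    }
    return names;
}

// In Script Editor, with the language set to JavaScript:
// console.log(serviceNames(Application('Messages')).join(', '));
```

The application object is passed in as a parameter so the same function can be tried against a plain stand-in object outside of Script Editor.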

I can see that I have lots of services to choose from to send a message. I now know that there is an SMS service I can use. It turns out there is an easier way to get to it as well. The JavaScript for Automation Release Notes document says that ArraySpecifier supports a .byName() function that will use the name() property instead of the array index to search an array for a contained object. Using this we can now get to the SMS service like this.
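Roughly, the lookup can be sketched as below. `serviceByName` is a hypothetical wrapper, and `'SMS'` is simply the name that showed up in my own list, so it may differ on another machine.

```javascript
// Hypothetical helper: fetch a service by its name instead of its array index.
// `app` is assumed to be the object returned by Application('Messages') in JXA.
function serviceByName(app, serviceName) {
    // byName() is the ArraySpecifier lookup described in the
    // JavaScript for Automation Release Notes.
    return app.services.byName(serviceName);
}

// In Script Editor:
// var sms = serviceByName(Application('Messages'), 'SMS');
```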

Now let's try to find out what buddies I have listed under the SMS service. From what I can tell, it is drawing from the buddies it finds in your address book who have mobile numbers listed. I won't show the output (since they are my personal contacts) but here is the script that lists existing buddies.

Now you have a listing of all the buddies you can reach via SMS in Messages. The .handle() property can be used instead of .name() to display the actual mobile number or account name used on the service. This is required if the same name appears more than once in the list. I have shortened the script by using the .whose() function, which is also documented in the JavaScript for Automation Release Notes, to search the service's buddy collection for the one whose .handle() property is the phone number I want to send a text message to. This lets me sidestep duplicate .name() values in the list. Note that .whose() returns an ArraySpecifier, so we need to take the first element.
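A sketch of that search, assuming the service exposes a buddies collection the way the Messages dictionary describes it. `buddyByHandle` is a name I made up, and the phone number in the usage comment is a placeholder.

```javascript
// Hypothetical helper: find the first buddy on a service whose handle matches exactly.
// `service` is assumed to be an ObjectSpecifier such as
// Application('Messages').services.byName('SMS').
function buddyByHandle(service, handle) {
    // whose() returns an ArraySpecifier of matches, so take element 0.
    return service.buddies.whose({ handle: handle })[0];
}

// In Script Editor (placeholder number):
// var sms = Application('Messages').services.byName('SMS');
// var myBuddy = buddyByHandle(sms, '+15555550100');
// console.log(myBuddy.name());
```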

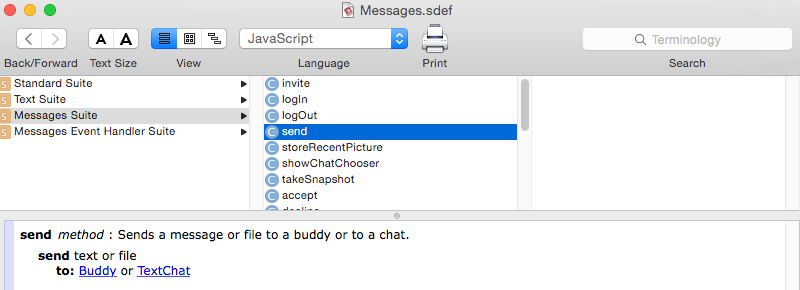

Now, choosing one buddy, we will actually send a message. Here the dictionary entry from Messages is just not very easy to understand. It was not initially obvious to me that I had to call messages.send() with the parameters above, but once you see how to read the dictionary, future calls for any app are easy to figure out. Here is the dictionary entry for send().

So what is this trying to tell us? If you have ever worked with Objective-C you will know that the first argument of a function is actually named after the function itself. After that, all the other arguments are named arguments. This translates into JavaScript as .function(firstArgument, {"secondArgument":"value2","thirdArgument":"value3"}) or in this case .send("Text to send",{to:myBuddy}). That is how multiple named parameters are modeled when making an Objective-C call from JavaScript. This is what the entry above is trying to say. Note the absence of the : next to send above. This indicates that the first parameter (the one outside the {}) is the message text.
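Put together, the calling convention can be sketched like this. `sendText` is a hypothetical wrapper around the send() call, and `app` and `buddy` are assumed to be the Messages application object and a buddy ObjectSpecifier.

```javascript
// Hypothetical wrapper showing the calling convention for a scripting command.
// `app` is assumed to be Application('Messages'); `buddy` is a buddy ObjectSpecifier.
function sendText(app, buddy, text) {
    // The direct (unnamed) parameter comes first: here, the message text.
    // Every named parameter goes into a single object literal after it.
    return app.send(text, { to: buddy });
}

// In Script Editor:
// sendText(Application('Messages'), myBuddy, 'Text to send');
```

Keeping the named parameters in one trailing object mirrors how the dictionary lists them after the command name.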

So, where did this all start? I was trying to implement this ....

in JavaScript. Right now I am close but notice, I did not have to deal with any buddy list in the AppleScript command above. It just seemed to know to search the .handle() property to find the buddy. How can we simplify this even further based on what was learned so far? I managed to compact it down to a one line command that translates into the AppleScript shown above. Here it is...

Here I search all buddy handles for a matching phone number, and the buddy I got back already pointed at the SMS service. It would have been longer if I had limited the search to the SMS service's buddies.

After this journey through using JavaScript instead of AppleScript to do a simple task, what did I learn?

- It's very easy to make Script Editor lock up with a spinning beach ball when working in JavaScript. Just ask it to run a function on an ObjectSpecifier that does not exist. After that I had to force quit it before I could run any more scripts. This seemed broken to me.

- Even though AppleScript is not intuitive to use, it does create compact, readable code in this case when it finally works, compared to JavaScript.

- The JavaScript implementation of AppleScripting does not go one bit out of its way to appeal to experienced JavaScript users. Nothing you already know will really help you. Objective C knowledge turned out to be more helpful.

I did not want what I went through tonight to go to waste so I hope writing down my observations and the steps I went through to make them can help other people out with whatever they might try and do with JavaScripting OS X applications. There was not much to be found on this topic when I was searching for answers and hopefully, this article will save you some time. If you find a better way to implement this simple command, please post a comment to let me know.

Tuesday, December 23, 2014

Robot Accidents will Happen

Things have been moving slowly forward. For a while there, I ran short on funding and without a constant supply of new parts, progress is slow. The last time I posted, I was talking about installing the head on K9. Well I got the head installed but there was some tragedy that followed the installation. I could not resist road testing him after installing his head and this was a mistake.

There must be a bug in his drive Arduino code that interprets the remote control signals for the wheels because as soon as I turned on my remote control unit, he decided to go into a spin. The failsafe I built into the code which shuts the motors down if a toggle switch on the remote is flipped also failed. Because he has two scooter motors, when he spins it can get very fast and I was not prepared. He spun so fast that when his head collided with a table leg, he broke his newly installed neck. This was a setback and I had to remove the existing neck hinge and start over. I don't think I will include any pictures of the lolling broken head. I have attached a temporary neck joint while I work out a more permanent way to rebuild it.

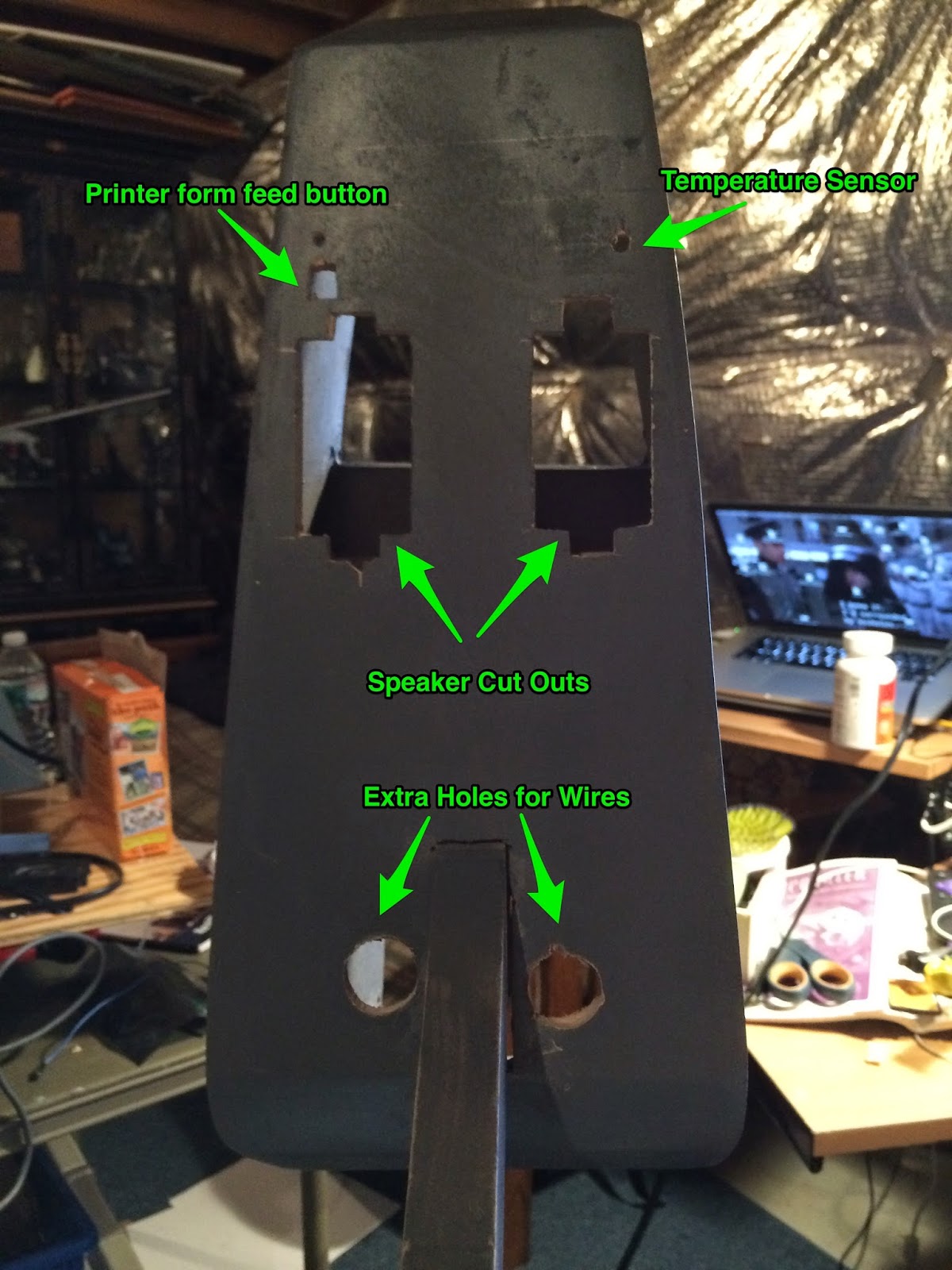

On a more positive note, I have installed the thermal printer into the head. I discovered that this printer is surprisingly versatile after a bit of a rocky start. When I would do anything other than a simple test print, the speakers attached to the Raspberry PI would literally growl and buzz as the printouts came out. If you listen closely during the video below you can hear a strange, almost futuristic sound during the print process.

This cool sound was actually caused by a voltage drop from the Raspberry PI's power supply. I had hooked the printer's power and ground to the PI's +5 volt supply and it could not handle printing directly. When the printer drew too much power from the PI, it would literally stop printing. It also caused the PI to occasionally lock up. The solution was, of course, to get power directly from the battery and only drive the serial data from the PI. Here is a shot of the printer installed inside the head.

I had to actually saw off about 1 cm from each side of the printer to get it to fit in the space available but fortunately, it was vestigial plastic and the printer still works. Once I got the printer working reliably, I was able to get it to print dithered photographs of faces and I expect I will be able to use it to print out souvenir print-outs with pictures of people's faces taken from the head mounted camera.

Next, I needed to create the red eye lens for the head. I purchased one 12" square sheet of red acrylic from Amazon and I started out using an acrylic cutting tool which turned out to be very slow and painful. I switched to a box cutter and even this was not very efficient. Finally, I just started using an electric jigsaw and started cutting the stuff like wood. This works great as long as you go slowly. I think the blade actually melts a little as it cuts. Here is a shot of the end result.

In this shot you can also see the Raspberry PI camera which will eventually be mounted behind the red acrylic lens. You can also see the finished nose cover which was made by gluing a wooden dowel that was cut on an angle to the nose cover.

Now, I needed a way to create the eye lights behind the lens. Ultimately, these lights are used as an animatronic effect and are lit when the robot is speaking. In reality, I will have to place the head camera behind this lens as well so some compromises will have to be made to functionality. The camera itself will peep through a small hole on the right hand side of the lens.

Here bad fortune was turned to my advantage. A few weeks prior to working on this head, I accidentally dropped an exterior LED porch light while trying to install it in the back yard and broke both its glass lenses in the process. I had to go buy another but I salvaged the white ultra bright tri-LEDs inside.

They run fine on 12 volt power and I had two of them. They were perfect for eyes. There are cheaper ways to get LEDs than destroying an expensive outdoor light fixture, but it all worked out in the end. I built a light box out of white cardboard to house the new eyes. Here is a shot of the finished light box.

When I test lit the eyes they looked pretty good. Note that I had to put translucent paper behind the red lens to diffuse the light. Here is what they looked like when I test lit them.

This photo does not really do them justice as it makes them look far brighter than they are.

I have made even more progress over the past few months but this entry is getting a little long so I am going to wrap things up. Next up, I will talk about how I relocated the Raspberry PI into the head and installed the electronic antenna and camera into the head as well. For now I will leave you with one more head shot of the completed, telescoping head antenna.

Progress is slow but steady. I am starting to look forward to the software side of this project since the hardware's completion is finally in sight.

Thursday, October 2, 2014

Have Fun Getting Things Done

We all have things we want to improve on in our life. How often have you said things like, tomorrow, I will start my exercise program or my diet? When will I find time for the things I have to do and the things I enjoy doing? Even if you do start will you be able to convert these behaviors into permanent habits? What if there was a way to make being reminded about these things a little more fun while also providing you with the motivation you need to get them done?

Well, I would not be asking these questions if I did not want to talk about an application I came across that does exactly this, HabitRPG.

I have never been a big player of traditional low-resolution RPGs like Pokemon, but I have played a lot of EverQuest and WoW. One of the things these types of games can do is influence your behavior by building compulsions that draw you back to the game again and again, using virtual rewards as a motivator. This can be hugely effective but can also be a huge waste of your productive time. It does not have to be that way if we can keep the motivational aspects of playing a game but tie the rewards to getting things done in real life (IRL) instead.

HabitRPG has a simple system. Tell it the habits you want to encourage (like not smoking, if you smoke, or drinking more water), the things you want to commit to every day, called Dailies (like getting out of your chair and getting some exercise), and your to-do list of tasks you need to get done.

Once all this is entered, the game begins. You start at level one. You are given 50 hit points. If you lose all your hit points, you die. Now no one wants to die, but if you don't start checking off tasks they will cause you damage, and each day your incomplete tasks will drain your hit points. How do you gain back your hit points? By leveling up your character. Each task you actually complete grants you (through your character) experience points and gold. Accumulate enough experience points and you will gain a level. Gain a level and you become completely healed. This cycle keeps you motivated as you build your character and gain levels. If you die, you lose a level after reading a disturbing dialog informing you of your death.

Wait a minute...did you say gold? Yes, in addition to building experience points, you also accumulate gold for completing tasks. Gold can be used as a reward since you can take things you enjoy doing and assign a value in gold to them allowing (or possibly reminding) you to reward yourself for all your hard work. Gold can also be used to buy gear to protect yourself from damage to your hit points or potions that will restore your hit points if you are near death.

One of my sons pointed out to me that you could cheat at this game by simply creating tasks and completing them for profit. This would be the equivalent of grinding in an MMO or an RPG. My response to this is why bother. The only person you are cheating is yourself. If you can't be honest in reporting your own accomplishments, how can this game help motivate you to get things done in the first place?

What about the stress or pressure you might start to feel if not finishing your dailies is rapidly killing you? Nobody needs to have panic attacks over an artificial motivation such as a fear of death in the game. The solution for this is simple: just check into the Inn. If you check in to the Inn then you will not take any damage until you check out again. I died on my second weekend playing the game because my daily tasks were scheduled for every day of the week and the ones I skipped killed me. Once I fixed this so that they were only due during the work week, things went much better for me. Going on vacation? Don't forget to check in to the Inn for this as well.

During my day job I usually plan my work week using another web based tool called JIRA and a plugin called JIRA Agile. This tool works great for team assignments, planning and to-do lists for a project, and I have started using it in conjunction with HabitRPG. I find that the concept of dailies and habits and having a personal to-do list works great in conjunction with my JIRA task lists (now if only I could arrange to get gold and experience points for closing out JIRA tasks!).

I am only at level five at this point but I think I am going to stick around. The rewards for leveling include access to a character class system where you can specialize and take up such occupations as Warrior, Mage or Rogue. These classes give you access to special abilities which allow you to help yourself or others. Something I am just beginning to discover is the possibility of group play. The game has Groups and Guilds which allow you to tackle goals with a team of people.

At this point I have to ask myself, who should use this tool? I think anyone who needs a little help motivating themselves to get more done or to finally start doing some of the things they have been meaning to do forever should consider it. I am trying to introduce my kids to it but so far, no luck. Just imagine getting gold and experience for doing your homework.

It will be interesting to see how this application evolves. I have often wondered if there should be an extension of the site that actually lets you use your character in a real RPG environment. This would allow you to actually play your character in a game while building it during your day job. To my knowledge this does not exist yet but I think the idea has potential.

In case you are wondering, I have set up work on my robot as a reward. It costs me 10 gold but it is worth it.

Sunday, September 14, 2014

Getting A Head

I finally got around to building the head for my K9 prop/robot. I have been dreading this for a long time because of the rounded edges on the top, bottom and sides of this piece. I knew there would be a lot of bending, scoring and clamping to make this happen. In the end, I was not 100% happy with the results. Let's start with a shot of the finished product.

The goal so far with body construction has been to sand and prime the outer shell and move on. I intend to do a final patch, sand and paint job at the end of the project after all the equipment has been fitted inside.

At first I thought the head was too big, but I have checked it against hero prop shots and the original plans and it's not. The production designers actually made the head bigger in later versions of the prop, a fact I picked up while researching what I thought was a problem. It's a good thing it's big, too, because it needs to hold animatronics for its ears and nose and a thermal printer. I have also decided to move the Raspberry PI and external speakers into the head as well to shorten the distance between it and the camera. The head will probably be more complex than the entire body.

Now let's talk about construction. I started by gluing the bottom plate to the sides and then gluing a cross brace at the brow and the back of the head.

After clamping, I countersank wood screws on both sides of the cross braces. I later had to add a cross brace on the bottom because there was too much stress for glue alone to hold it together.

Here is a shot looking down into the head from the top showing the bottom cross brace. This was after the brow cross brace and neck mount were inserted.

It is definitely at an angle. This was because I adjusted it to try and change the shape of the head, which was a little misshapen without cross braces to hold it in place. Also shown here is a cross brace in the back of the head to allow the curved plate of wood in the back to be bent around it.

The back plate had an almost circular curve to it. This is not easy to do with wood. I was thinking of using water to soften it but decided to score the back of it every inch and a half to make it more pliable.

In the end this loosened it just enough to let it curve around the back and fit. After this plate was attached I covered over all the screws with wood putty and gave everything a priming and sanding.

This left only the top of the nose and the head without wood. These will form the removable access doors. I will also have to cut additional compartments for speakers, printer controls and a temperature sensor. They are all going to be mounted on the underside as shown below.

I have fitted the head into place on the body to get a better idea of how I am going to permanently mount it. During this process I discovered that once the head was installed, I could no longer use the remote control to move the robot forward. Its front-mounted ultrasonic range finder kept reporting that there was an object about 8cm in front of it and it would not allow me to move it forward no matter what I did. I assumed that I had damaged the sensor while installing the head so I removed it.

Once I removed it, I could move the robot by remote control again. Unfortunately, without this sensor to stop it, once I started testing it, it ran out of control and broke its neck, causing the head to sag but not come completely off. This is not my first setback during this project but it was the worst since the fire in the dorsal light control board. It was after this accident that I realized that the ultrasonic range sensor was detecting the robot's own head as an obstacle. Once I moved the head up and out of the way, the range finder began working again. Pity I had to remove it and bench test it to find this out. I should have realized it when the problem first began.
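Looking back, the failure had a recognizable signature: a reading that sat inside the stop zone and never changed. Here is a minimal sketch of how that could be checked in software. This is not K9's actual code; the function names, the 20cm stop distance and the 1cm tolerance are all assumptions made up for illustration.

```python
# Hypothetical values for illustration only; not taken from K9's real software.
STOP_DISTANCE_CM = 20.0   # assumed distance at which forward motion is blocked
STEADY_TOLERANCE_CM = 1.0  # assumed jitter allowed for a "constant" reading


def forward_allowed(distance_cm, stop_distance_cm=STOP_DISTANCE_CM):
    """Allow forward motion only when the nearest object is beyond the stop distance."""
    return distance_cm > stop_distance_cm


def looks_like_self_detection(readings_cm,
                              stop_distance_cm=STOP_DISTANCE_CM,
                              tolerance_cm=STEADY_TOLERANCE_CM):
    """Flag a run of readings that are all blocked and nearly identical.

    A real obstacle usually gets closer or farther as the robot or the
    obstacle moves. A reading that never changes may be part of the robot
    itself sitting in the sensor's cone.
    """
    if len(readings_cm) < 3:
        return False  # too few samples to judge
    blocked = all(r <= stop_distance_cm for r in readings_cm)
    steady = max(readings_cm) - min(readings_cm) <= tolerance_cm
    return blocked and steady


if __name__ == "__main__":
    recent = [8.0, 8.2, 7.9, 8.1]  # roughly what the sensor reported with the head on
    print(forward_allowed(recent[-1]))
    print(looks_like_self_detection(recent))
```

A check like this would only be a hint to go look at the hardware. It should never be used to override the stop, because a wall that the robot is parked in front of produces exactly the same readings.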

Well this entry probably ran on too long so I will end it with a shot of K9 with his head (before it got broken) sitting next to Appa, the beagle. Next time I will talk about installing equipment into the newly built head.

Sunday, July 27, 2014

LCD Display and Tail Rig Installed

It's been a while since I updated. This is because progress is slow. I work on new hardware when I can. There have been significant improvements and I will summarize some of them with some progress pictures.

First off, my family has added a new real living dog to the crew. His name is Appa after the sky bison from Avatar: The Last Airbender. Here is a picture...

He is into everything and he is not sure what to make of K9. At least he has not decided to chew on him yet. Now back to what progress I have made.

In my last entry I had started working on the tail servo rig. This is now completed and I have a wagging tail that can be controlled through the web interface. Here is a video of the installed tail rig in operation.

The web interface is coming along nicely too. K9 runs a Python-based web server (Flask) and exposes all of his functions for debugging and testing through it. This has been invaluable as a testing tool, allowing me to debug the tail motor and dorsal LCD display. Here are a few shots of the web interface which is a work in progress, of course.

Moving on, I have finally gotten around to installing the LCD composite monitor on the left side of the shell. This is very handy because I can actually watch the boot-up process and know what is happening when things go wrong.

I still have the trim and an acrylic cover for this display on order. They really need to be installed so it will not look so obviously like a car DVD player. It still comes in really handy. Here is a video which shows off the display and the ability to play sounds from the web interface.

So everyone asks me, "That's great but when are you going to build the head?" Well it's all about parts and time. I have the wood cut for the head but I don't want to assemble it until I at least get the printer that I am going to install in it delivered. I want to make sure I leave enough room inside the head to mount the printer. The head will have multiple servos inside it to control the ears, telescoping antenna and nose gun and will have to be constructed around these systems. Still, it's next on the list and I need to get started on it.

I always like to post other K9 builds I come across. Here is one that I came across at Philadelphia Comicon this year (2014). I don't think it ever moved on its own and it obviously has been around (just look at the scratches and dents) but it is always interesting to see what other people have done.

It's a good reminder of how far I still have to go on this project to complete the hardware. After that, hopefully I will have even more work ahead of me writing software to allow it some autonomy from its own remote control. That's it for now. I will try to post some more design details and pictures as I start construction of the head.

Sunday, June 1, 2014

A Dog's Tail - Pitch and Yaw on the Cheap

I don't have the parts or tools to build rigs out of aluminum or steel but I need to figure out how a set of servos can control K9's tail. Wood and plastic will have to do for now until those parts break or wear out. The tail essentially is a pole that must be able to move horizontally and vertically by motor control. Here is a shot of the actual tail on the prop itself.

Fortunately, there are a few good examples already out there on how to build this motion rig. For example, here is one using only DC motors. Here is another example using servo motors which is very impressive. This rig is built with multiple aluminum C-brackets and struts which looks very sturdy but I have no idea where to get the parts. So far I have built a test rig consisting of two RC aircraft 180 degree servo motors connected together, one to the shaft of the other, as seen in this example I found for sale.

Incidentally, if you are looking for the rubber fixture I am going to use, it's a CV-Boot from a car. They can be found in almost any auto supply store. Here is a picture of the one I chose.

It is a close enough match to get the job done. I will end up cutting away the lower half when I mount it to the body. Now back to the test rig. Below is a video of the test rig I built just to see if my two servo idea would work. The two servos are joined together with rubber bands so you will see a lot of oscillation as the arm moves around, and a hanger is standing in for the antenna, but other than that, it is performing the required motions.

Once I replace the rubber bands with firmer connections, all shaking will go away and I will have the wagging motions I need without having to construct a more complex rig. How long it will last in action will be another matter. Everything is being driven from an Arduino that is not connected to the main robot. This is just for testing purposes. The next step will be to move everything to a permanent rig that I can attach to the CV-Boot inside the main shell. I will post pictures of that when that is completed.
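For anyone curious about the control side, here is a rough sketch of the math behind driving a two servo rig like this: turning an angle into a servo pulse width and generating a side to side wag. The real test rig runs on an Arduino, so this Python version is only an illustration of the idea. The 1000 to 2000 microsecond pulse range is a common hobby servo convention that I am assuming here, not something measured on these servos, and the function names are made up.

```python
import math

# Assumed hobby-servo convention; check the datasheet for the actual servos.
MIN_PULSE_US = 1000     # pulse width at 0 degrees (assumption)
MAX_PULSE_US = 2000     # pulse width at 180 degrees (assumption)
SERVO_RANGE_DEG = 180   # the test rig uses 180 degree servos


def angle_to_pulse_us(angle_deg):
    """Map an angle to a pulse width in microseconds, clamped to the servo's range."""
    clamped = max(0.0, min(float(SERVO_RANGE_DEG), angle_deg))
    span = MAX_PULSE_US - MIN_PULSE_US
    return round(MIN_PULSE_US + span * clamped / SERVO_RANGE_DEG)


def wag_angles(center_deg=90.0, swing_deg=30.0, steps=8):
    """Return one full wag cycle of yaw angles as a sine sweep around the center."""
    return [center_deg + swing_deg * math.sin(2 * math.pi * i / steps)
            for i in range(steps)]


if __name__ == "__main__":
    pitch_deg = 100.0  # hold the tail slightly raised (hypothetical value)
    for yaw_deg in wag_angles():
        # One pulse width per servo: yaw for side to side, pitch for up and down.
        print(angle_to_pulse_us(yaw_deg), angle_to_pulse_us(pitch_deg))
```

The clamp matters on a rig like this. Commanding a servo past its mechanical limit makes it stall against the stop, which is hard on plastic gears and wooden mounts.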
